Shiver me timbers! I have been listening to multiple podcast interviews with AI pioneers and finally decided how to repurpose an end-of-life, out-of-support server from work: set up my own little LLM! Little did I know how easy it is, and you do not even need an end-of-life, out-of-support server from work to do this! I already have multiple models running on my laptop as well!

Read on for how you can do this as well!

Background

So my recent P(doom) post has led me down a rabbit hole of seeing all sorts of really cool movies and listening to lots of podcasts with AI pioneers:

- Dwarkesh Podcast: Richard Sutton – Father of RL thinks LLMs are a dead end

- Lex Fridman: #416 – Yann Lecun: Meta AI, Open Source, Limits of LLMs, AGI & the Future of AI

- Lex Fridman: #475 – Demis Hassabis: Future of AI, Simulating Reality, Physics and Video Games

and that triggered an itch to finally begin playing with this technology instead of just consuming it. So I figured I would need a big server with an NVIDIA GPU to scratch the itch. I happen to have access to an end-of-life, out-of-support server from work that was decommissioned earlier in the year, and I have been trying to figure out what to do with it. My first idea was to install Proxmox to create my own hyperscaler. I even installed Proxmox, but realized I had not partitioned the disks quite right and never got back to it. So I decided why not just install Debian and play with LLMs!

Rabbit hole

Once Debian was installed I asked ChatGPT how to get started and it recommended starting with Ollama. I kinda just thought that was Meta’s open source Llama LLM, which had been my initial idea to play with after listening to the interview with Yann Lecun (Ollama is actually a separate tool for downloading and running open models locally, Llama included).

So I followed the instructions:

root@ganymede:/home/andrew# curl -fsSL https://ollama.com/install.sh | sh

>>> Installing ollama to /usr/local

>>> Downloading Linux amd64 bundle

######################################################################## 100.0%

>>> The Ollama API is now available at 127.0.0.1:11434.

>>> Install complete. Run "ollama" from the command line.

That was easy and painless. And now what?

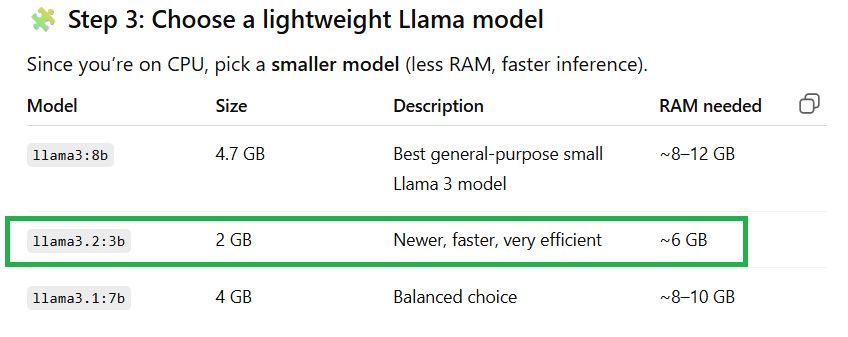

ChatGPT recommended running llama3.2, which was new and efficient on a device without a GPU.

So I gave it a test:

root@ganymede:/home/andrew# ollama run llama3.2

pulling manifest

pulling manifest

pulling 6a0746a1ec1a: 100% ▕██████████████████████████████████████████████████████████▏ 2.0 GB

pulling 4fa551d4f938: 100% ▕██████████████████████████████████████████████████████████▏ 12 KB

pulling 8ab4849b038c: 100% ▕██████████████████████████████████████████████████████████▏ 254 B

pulling 577073ffcc6c: 100% ▕██████████████████████████████████████████████████████████▏ 110 B

pulling 3f8eb4da87fa: 100% ▕██████████████████████████████████████████████████████████▏ 485 B

verifying sha256 digest

writing manifest

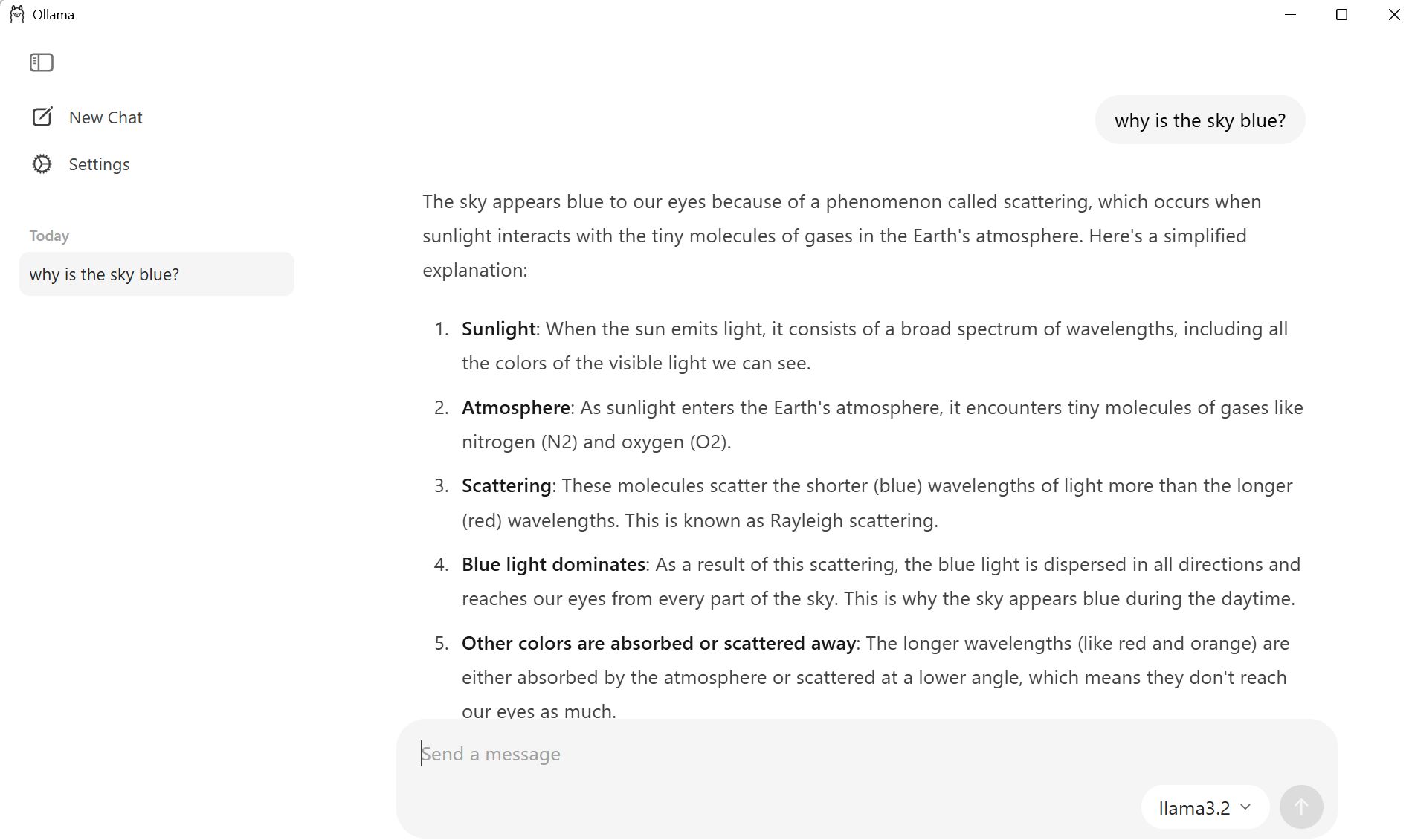

>>> why is the sky blue?

The color of the sky appears blue because of a phenomenon called Rayleigh scattering.

Here's what happens:

1. When sunlight enters Earth's atmosphere, it encounters tiny molecules of gases such as nitrogen (N2) and oxygen

(O2).

2. These gas molecules scatter the light in all directions.

3. The shorter, blue wavelengths of light are scattered more than

...

OMG. and just like that I have my own offline LLM? That is crazy.
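The installer output above mentions an API at 127.0.0.1:11434, so the same question can also be asked from a script instead of the interactive prompt. Here is a minimal sketch using only the Python standard library. It assumes Ollama's /api/generate endpoint with streaming switched off; the function names build_generate_request and ask are made up for this illustration.

```python
import json
import urllib.request

# Address taken from the installer output above.
OLLAMA_URL = "http://127.0.0.1:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama3.2") -> urllib.request.Request:
    """Build (but do not send) a non-streaming request for Ollama's generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "llama3.2") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as reply:
        return json.loads(reply.read())["response"]
```

With the server running and the model already pulled, print(ask("why is the sky blue?")) should print an answer much like the one above. Nothing leaves the machine, since the request goes to localhost.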

Let me see how much space this is taking up on my end-of-life, out-of-support server:

root@ganymede:/home/andrew# ollama list

NAME ID SIZE MODIFIED

llama3.2:latest a80c4f17acd5 2.0 GB 6 minutes ago

Two gigabytes and this thing knows everything¿?¿?¿? I am a big fan of offline things and used to always have an offline version of Wikipedia on my mobile phones, until the English version got too big and phones stopped having SD card slots. I think the last one I had was pushing close to 60 GB!
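Where do the two gigabytes come from? A rough back-of-the-envelope sketch: file size is about the number of parameters times the bits stored per parameter. Both numbers below are assumptions, not something the post measured: roughly 3.2 billion parameters for the default llama3.2 tag, and about 4.85 bits per weight for a 4-bit "Q4_K_M" style quantization.

```python
def estimated_size_gb(parameters: float, bits_per_weight: float = 4.85) -> float:
    """Approximate on-disk size of a quantized model, in decimal gigabytes."""
    return parameters * bits_per_weight / 8 / 1e9


# Assumed parameter count: about 3.2 billion for the default llama3.2 tag.
print(f"llama3.2: about {estimated_size_gb(3.2e9):.1f} GB")
```

The same arithmetic with 70 billion parameters lands near the 42 GB listed for llama3.3:70b-instruct-q4_K_M in the model table at the end of this post, so the estimate seems to hold up reasonably well.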

Waitaminute here. If that was so easy, does Ollama have a Windows version that I could run on my laptop?

Yes siree bob!

and it even has a GUI front end with a history:

Wow. I am just in awe. speechless. This is crazy. I do not even need the end of life out of support server, but now that I have it, let’s explore more!

Experimenting with my own LLM

Please note: all of these are quick-and-dirty, let’s-see-what-is-possible examples, copied and pasted from ChatGPT output. Now that I see how possible and easy this is, I am thinking of how to utilize this power for id’ing and tagging my 59,607 photos.

(1) Let’s have it review all the photos in a directory and then allow me to ask questions about content of the photos

first I installed the needed tools:

pip install torch torchvision faiss-cpu pillow numpy tqdm open_clip_torch --break-system-packages

then created a photo_search.py script:

1 | import os |

then I copied all my September photos to /home/andrew/photos and gave it a test run:

root@ganymede:/home/andrew# python3 photo_search.py

Found 105 images

Embedding images...

100%|██████████████████████████████████████████████████████████████████████| 105/105 [00:29<00:00, 3.56it/s]

Search for: dog

Top matches:

1. /home/andrew/photos/PXL_20250911_193650822.jpg (score: 0.264)

2. /home/andrew/photos/PXL_20250906_131654031.MP.jpg (score: 0.259)

3. /home/andrew/photos/PXL_20250911_181236959.jpg (score: 0.258)

4. /home/andrew/photos/PXL_20250901_082308584.jpg (score: 0.256)

5. /home/andrew/photos/PXL_20250903_112733955.MP.jpg (score: 0.253)

Search for:

Holy sheep shooters! It took about 30 seconds to analyze the 105 images and my dog was in every one of the 5 pictures!

I also tested searching for “cocktail”, “clock tower”, “bull”, and “mountain” and all the results came back instantly and were mostly correct!
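The search step itself is simple once every photo has been turned into a vector. The sketch below is not the photo_search.py script (which is not reproduced here); it only illustrates the ranking idea, assuming the scores in the output are cosine similarities between a text embedding and each image embedding. The three-number vectors and file names are toy stand-ins for real embeddings.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_matches(query_vec: list[float], image_vecs: dict[str, list[float]], k: int = 5):
    """Return the k (path, score) pairs most similar to the query, best first."""
    scored = [(cosine(query_vec, vec), path) for path, vec in image_vecs.items()]
    scored.sort(reverse=True)
    return [(path, score) for score, path in scored[:k]]


# Toy vectors standing in for real image embeddings (hypothetical file names).
toy_index = {
    "dog_on_beach.jpg": [0.9, 0.1, 0.0],
    "clock_tower.jpg": [0.0, 0.2, 0.9],
    "dog_in_park.jpg": [0.8, 0.3, 0.1],
}
query = [1.0, 0.0, 0.0]  # pretend this is the embedding of the word "dog"

for path, score in top_matches(query, toy_index, k=2):
    print(f"{path} (score: {score:.3f})")
```

This would also explain why the searches came back instantly: the slow part is embedding the photos once, and each query afterwards is just a comparison of numbers.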

(2) Let’s try and use it to id people in photos

First needed to install needed dependencies:

1 | sudo apt install cmake |

then I created a “known_faces” folder where I put a picture of each of my immediate family members:

/andrew/photos/known_faces/

├── me.jpg

├── wife.jpg

├── child 1.jpg

├── child 2.jpg

Then I created a photo_people_tag.py script:

1 | import os |

and I ran the script. This took longer to run, 13 minutes 21 seconds for the 105 images:

root@ganymede:/home/andrew# python3 photo_people_tag.py

Loaded 4 known people: ['me', 'wife', 'child 1', 'child 2']

100%|████████████████████████████████████████████████████████| 141/141 [13:19<00:00, 5.67s/it]

Tags saved to /home/andrew/photos/photo_tags.json

I reviewed the results and they were very impressive given only a single example of each family member!
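How can one photo per person be enough? A plausible explanation, and it is only an assumption since the photo_people_tag.py script is not reproduced here, is that each face gets converted to a vector and a new face is tagged with the nearest known vector, as long as it is close enough. The sketch below shows just that matching step. The two-number vectors and the 0.6 tolerance are illustrative values, not taken from the script.

```python
import math


def identify(face_vec: list[float], known_faces: dict[str, list[float]], tolerance: float = 0.6):
    """Return the name of the closest known face, or None if none is within tolerance."""
    best_name, best_dist = None, tolerance
    for name, known_vec in known_faces.items():
        dist = math.dist(face_vec, known_vec)  # Euclidean distance
        if dist <= best_dist:
            best_name, best_dist = name, dist
    return best_name


# Toy encodings standing in for real face vectors (hypothetical values).
known = {"me": [0.1, 0.2], "wife": [0.9, 0.8]}

print(identify([0.15, 0.25], known))  # close to "me"
print(identify([5.0, 5.0], known))    # far from everyone, so no tag
```

The tolerance is the knob to play with: a lower value means fewer wrong tags but more missed faces.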

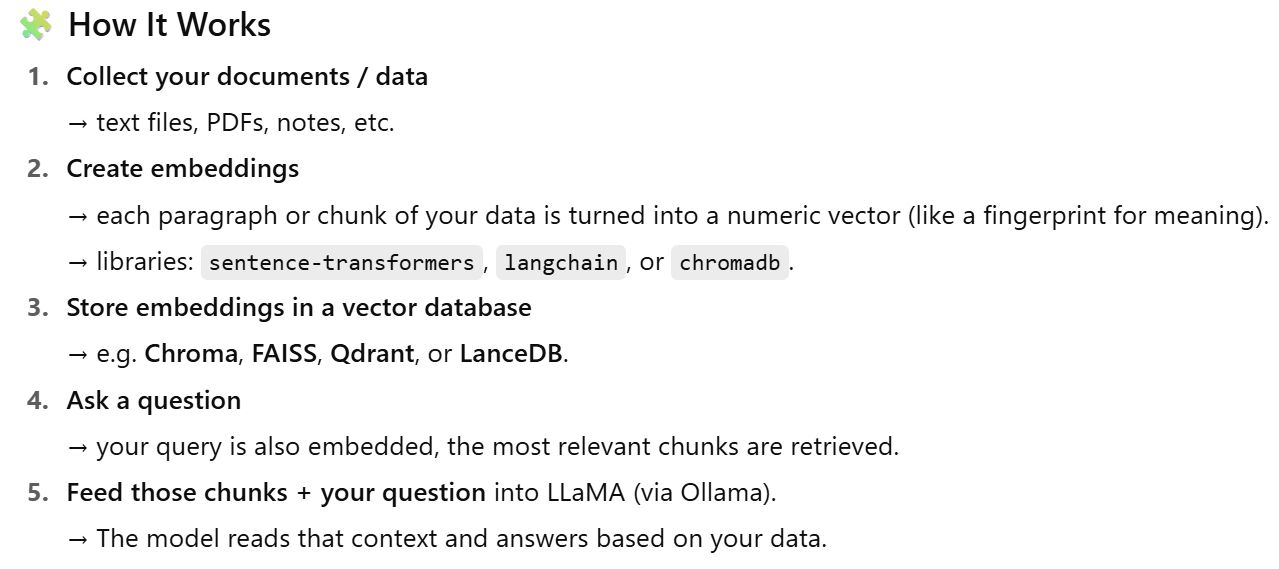

(3) Let’s try and have it ingest my own data and allow me to ask questions about it

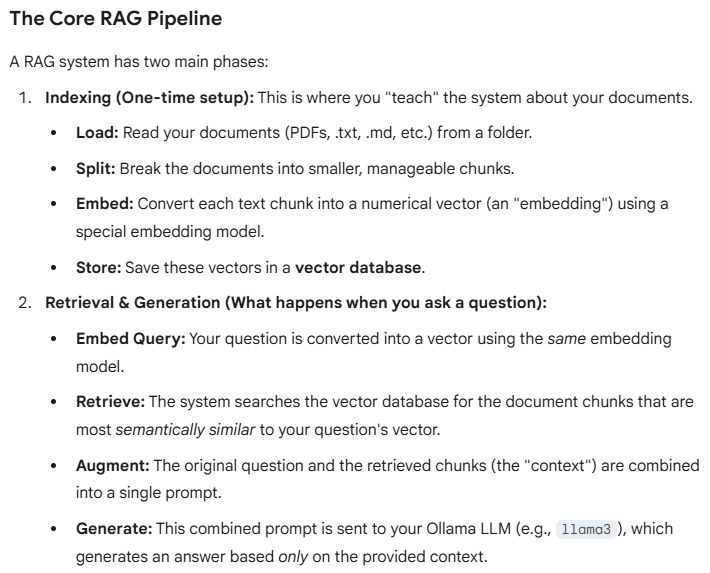

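So here I learned that to do this you do not need to “train” or “fine-tune” the model, because that is really CPU and GPU demanding. Rather, you need to use RAG (Retrieval-Augmented Generation): the passages of your documents most relevant to a question are looked up first and handed to the model as context along with the question.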
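To make the idea concrete, here is a toy sketch of the two halves of RAG: pick the most relevant chunks, then paste them into the prompt. It is an illustration under loud assumptions. A real setup ranks chunks with embeddings and a vector database, while this one just counts shared words, and the function names and the prompt wording are invented for the example.

```python
def score(question: str, chunk: str) -> int:
    """Toy relevance score: how many distinct question words appear in the chunk."""
    return len(set(question.lower().split()) & set(chunk.lower().split()))


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks that share the most words with the question."""
    return sorted(chunks, key=lambda chunk: score(question, chunk), reverse=True)[:k]


def build_prompt(question: str, chunks: list[str], k: int = 2) -> str:
    """Assemble the text that would be sent to the model."""
    context = "\n\n".join(retrieve(question, chunks, k))
    return (
        "Answer using only the context below. "
        "If the answer is not there, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Made-up document chunks for the demonstration.
chunks = [
    "the cuban missile crisis began in 1962",
    "bread needs time to rise",
    "khrushchev agreed to remove the missile sites",
]
print(build_prompt("who ended the missile crisis", chunks))
```

The model never gets retrained. It only ever sees the few chunks that made it into the prompt, which is why this is so much cheaper than fine-tuning.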

Here is what we need to do:

ok let’s install the dependencies:

pip install chromadb langchain ollama --break-system-packages

copy and paste provided script rag_qa.py:

1 | from langchain_community.llms import Ollama |

and fire it up:

root@ganymede:/home/andrew# python3 rag_qa.py

Traceback (most recent call last):

File "/home/andrew/rag_qa.py", line 4, in <module>

from langchain.chains import ConversationalRetrievalQAChain

ModuleNotFoundError: No module named 'langchain.chains'

and this crashed and burned. So I began letting ChatGPT know the errors, spent a couple of hours on it and had no luck whatsoever. In the end I used up my free ChatGPT credits and think it was just in a loop of changing one module for another… will have to try this again once I have more ChatGPT credits.

… couple hours later. I enabled Gemini in my Google Workspace and asked it:

and it gave me the plan of attack:

first I installed the dependencies:

# Main orchestration library

pip install langchain langchain-community --break-system-packages

# For the local vector database

pip install chromadb --break-system-packages

# To load your documents (e.g., PDFs)

pip install pypdf --break-system-packages

and prepared Ollama:

# Pull a good instruction-following model

ollama pull llama3

# Pull a top-tier local embedding model

ollama pull nomic-embed-text

and created a my_documents folder and put a single PDF, “a-history-of-the-world1.pdf”, in it. Have no idea what the PDF is, I just searched and downloaded the first thing I found…

I then copied and pasted ingest.py script provided by Gemini:

1 | import os |

and with much trepidation after this morning’s experience, I launched it and to my surprise it started doing things:

root@ganymede:/home/andrew# python3 ingest.py

Starting document ingestion...

100%|████████████████████████████████████████████████████████████████████| 1/1 [00:25<00:00, 25.25s/it]

Loaded 1008 documents.

Split documents into 5110 chunks.

embeddings = OllamaEmbeddings(model=EMBEDDING_MODEL)

Creating vector store and embedding documents (this may take a while)...

and it does appear to take a while… it has been going around 15 minutes now… maybe this is a function that would go quicker with a GPU? Will have to ask Gemini once it has finished.

and after about a total of 25 minutes it finished:

--------------------------------------------------

✅ Success! Vector store created at: ./chroma_db

Total chunks indexed: 5110

--------------------------------------------------
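The jump from 1008 loaded pages to 5110 chunks comes from the splitting step: each page is cut into smaller overlapping pieces before being embedded. The ingest.py script is not reproduced here, so this is only a plain-Python sketch of the idea. The chunk_size and overlap defaults are guesses, not the values the script used.

```python
def split_text(text: str, chunk_size: int = 1000, overlap: int = 200) -> list[str]:
    """Cut text into chunks of at most chunk_size characters, each sharing
    `overlap` characters with the previous chunk."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reached the end of the text
        start += step
    return chunks


print(split_text("abcdefghij", chunk_size=4, overlap=1))
```

The overlap is there so that a sentence cut in half at a chunk boundary still appears whole in one of the two neighbours. Every chunk then needs its own embedding, which is most likely where the 25 minutes went.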

Ok! nice! now we need to create a script to ask questions about my data. So I copied and pasted query.py:

1 | import sys |

launched the script and oh nooooooooo:

root@ganymede:/home/andrew# python3 query.py

Traceback (most recent call last):

File "/home/andrew/query.py", line 5, in <module>

from langchain.chains import create_retrieval_chain

ModuleNotFoundError: No module named 'langchain.chains'

… lots of hours later, after lots of testing, asking all sorts of LLMs offline and on, and even good old Google searches, I finally got this to work on my laptop, and even on my laptop it was not easy. I can only chalk this up to the different versions of Python having made a big mess and made everything way more complicated than it should be.
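When an import fails like this, it can save time to check what is actually installed before swapping modules around. The sketch below is a small diagnostic using only the standard library. The package names come from the pip commands above; langchain_classic is an assumption on my part (newer LangChain releases appear to have moved the older chains into a separate package), so treat that line as something to verify rather than a fact about this setup.

```python
import importlib.util
from importlib import metadata


def module_available(dotted_name: str) -> bool:
    """True if `import dotted_name` would find a module, without raising."""
    try:
        return importlib.util.find_spec(dotted_name) is not None
    except (ImportError, ValueError):
        # find_spec imports the parent package first, which fails if it is missing.
        return False


def installed_version(package: str) -> str:
    """Version string of an installed distribution, or 'not installed'."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return "not installed"


for package in ("langchain", "langchain-community", "chromadb"):
    print(f"{package}: {installed_version(package)}")

# "langchain_classic.chains" is an assumed alternative location, see the note above.
for module in ("langchain.chains", "langchain_classic.chains"):
    print(f"{module}: {'found' if module_available(module) else 'missing'}")
```

Running it on both the server and the laptop would show whether the two machines simply ended up with different library versions.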

once working it is crazy powerful. The actual a-history-of-the-world1.pdf is 1008 pages long and any questions do take a few minutes to answer, but all that I asked it responded with answers that were quite interesting. Here are a couple examples:

andrew@Taygete:~$ python3 query.py

db = Chroma(persist_directory=CHROMA_DB_PATH, embedding_function=embeddings)

llm = Ollama(model=LLM_MODEL)

✅ RAG system is ready. Using LLM: llama3

Ask a question about your documents (type 'exit' to quit).

> give me a 5 line summary

Answer:

I don't know. The provided context appears to be excerpts from different sources praising a history book and expressing gratitude for help in writing it. There is no narrative or historical content that would allow me to provide a summary. If you meant something else, please clarify!

> tell me about the CHINA WAR

Answer:

Based on the provided context, here's what I can tell you about the China War:

The China War refers to the conflict between China and Japan that started in July 1937. It was a three-sided struggle between the Chinese Communists, Chinese Nationalists, and Japanese forces. The war began with a clash between Chinese and Japanese soldiers on an ancient bridge near Peking (now Beijing), known as the Marco Polo Bridge incident.

The Japanese army had been expanding its influence in northern China since 1933, but it was not until July 1937 that full-scale war broke out when Chiang Kai-shek chose to resist instead of being overawed. The war quickly spread from northern China, with the Japanese attacking Shanghai and capturing the Nationalist capital of Nanking by December 1937.

The Japanese suffered significant casualties, but the Chinese suffered far more, with estimates suggesting at least 370,000 Chinese deaths and widespread brutality, including mass killings, rape, and looting. The "rape of Nanking" in particular was a shocking event that left 20,000 Chinese civilians dead and became a byword for barbarity.

The war dragged on for years, involving multiple sides and factions, including the Chinese Communists, who sought to gain control over territories evacuated by the Japanese.

> give me an exectuive summary of the CUBAN MISSILE CRISIS and players

Answer:

Based on the provided context, here is a brief executive summary of the Cuban Missile Crisis and its key players:

**Summary:** In 1962, Soviet Union Premier Nikita Khrushchev secretly installed nuclear-capable ballistic missiles in Cuba, just 90 miles from the United States. The crisis was sparked when a U-2 spy plane detected the presence of these missiles, leading to a tense standoff between the US and the USSR. After several days of negotiations, Khrushchev agreed to dismantle the missile sites in exchange for a US promise not to invade Cuba.

**Key Players:**

1. **John F. Kennedy**: The 35th President of the United States, who faced the crisis during his presidency.

2. **Nikita Khrushchev**: The Premier of the Soviet Union, who ordered the installation of missiles in Cuba.

3. **Fidel Castro**: The leader of Cuba, who allowed the Soviet Union to install the missiles on Cuban soil.

I hope this summary meets your requirements!

Closing

Wow. Had no idea what was possible. Experiments 1 and 2 were done on a Friday afternoon and every piece of code just worked the first time. Crazy. Love afternoons like this! Experiment 3 I did on Saturday morning during coffee-in-bed time, and it was quite frustrating. But then I got up, took the dog out, made some bread, and while it was rising gave it another attempt, this time using Gemini. It was the first time I have ever tried it, and it came to the rescue and appeared to save the day, but I was still fighting with experiment 3 until close to midnight to get it to work. I am impressed with how Gemini works: it appears to have a much more structured approach to finding a solution when things don’t work. Only problem is I ran out of credits early in the afternoon and was left on my own. In any event, these three tests will leave me thinking about uses, and I am already planning to make much more use of Ollama as an offline LLM, so all in all it has been a very productive 24 hours!

Links, References and things that helped with this

- Ollama

- Ollama Explained: Transforming AI Accessibility and Language Processing

- Best Ollama Models 2025: Performance Comparison Guide

- Which is the smallest, fastest text generation model on ollama that can be used for chatbot?

- Ollama commands:

  - start the server: ollama serve

  - list models already downloaded: ollama list

  - download a model: ollama pull (name of model)

  - delete a model: ollama rm (name of model)

  - run a model: ollama run (name of model)

  - run a model and have it output performance stats: ollama run (name of model) --verbose

- Model runs with Ollama and results:

| Model | Size | Time to answer “what is cat6” | Notes |
| --- | --- | --- | --- |
| llama3.2:1b | 2.0 GB | 38.9589416s | LLaMA large language model, specifically designed for text-to-text tasks such as question answering, text classification, and more. Runs well on both ganymede and laptop. |
| llama3.3:70b-instruct-q4_K_M | 42 GB | 13m49.371253397s | LLaMA large language model with 70 billion parameters, making it one of the largest LLaMA variants. Would not run on laptop, and on ganymede took 13 minutes to answer cat6 questions. |
| deepseek-r1:8b | 5.2 GB | 3m1.9106937s | Has a much different way of responding to questions! So interesting! |
| phi4:14b | 9.1 GB | 2m7.3596856s | Phi-4: Microsoft’s Efficient Model. Well formed response, but too slow on my laptop. |
| llama3.1:8b | 4.9 GB | 1m23.923619948s | LLaMA large language model with 8 billion parameters. Nice well prepared response. Also seems quite quick on ganymede. |
| gemma3:4b | 3.3 GB | 1m38.350735035s | GEMMA (Generalized Entity Modeling for Multi-Task Applications) large language model architecture. Fast on ganymede. Provided most complete answer to what is cat6 with URL references and even a table comparing different standards. |
| gemma3:1b | 778 MB | 39.0868762s | Smallest I tested and super fast on my laptop and ganymede. Tested with Spanish translations as well and works well. |
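The timings above are in the "13m49.371253397s" style, which is awkward to compare by eye. Here is a small sketch that converts them to seconds and sorts the models from fastest to slowest. The values are copied from the results above; the helper name duration_seconds is made up for this example.

```python
import re

# Seconds per unit for durations written like "1h2m3.5s" or "250ms".
_UNIT_SECONDS = {"h": 3600.0, "m": 60.0, "s": 1.0, "ms": 0.001}


def duration_seconds(text: str) -> float:
    """Convert a duration such as '13m49.371253397s' to seconds."""
    parts = re.findall(r"(\d+(?:\.\d+)?)(ms|h|m|s)", text)
    if not parts:
        raise ValueError(f"not a duration: {text!r}")
    return sum(float(value) * _UNIT_SECONDS[unit] for value, unit in parts)


# Timings copied from the results above.
timings = {
    "llama3.2:1b": "38.9589416s",
    "llama3.3:70b-instruct-q4_K_M": "13m49.371253397s",
    "deepseek-r1:8b": "3m1.9106937s",
    "phi4:14b": "2m7.3596856s",
    "llama3.1:8b": "1m23.923619948s",
    "gemma3:4b": "1m38.350735035s",
    "gemma3:1b": "39.0868762s",
}

for model, raw in sorted(timings.items(), key=lambda item: duration_seconds(item[1])):
    print(f"{duration_seconds(raw):8.1f} s  {model}")
```

Keep in mind these are single runs of one question, so the ranking is a rough impression rather than a benchmark.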

Thanks for reading and feel free to give feedback or comments via email (andrew@jupiterstation.net).