New word of the day: P(doom) is the probability of existentially catastrophic outcomes (so-called “doomsday scenarios”) as a result of artificial intelligence.

I learned this term from a very interesting deep-dive podcast episode by NPR Explains… titled “AI and the Probability of Doom.” Take a minute to listen to it, then come back and let’s discuss P(doom)!

So for a catastrophic outcome to even be possible, AI would need to reach artificial general intelligence (AGI), the point at which it matches or surpasses human intelligence. The problem I see with the AI that everyday users come into contact with (ChatGPT, Copilot, etcetera) is that these are large language models trained by sucking up all the information they could find on the internet. Then, as I understand it, they generate a response by repeatedly predicting the next token (a word or a piece of a word). I don’t see how this makes the leap to actually “thinking” and coming up with novel ideas.
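To make the “predict what comes next” idea a bit more concrete, here is a toy sketch in Python. It is nothing like how ChatGPT is actually built. It only counts which word follows which in a tiny made-up corpus (a so-called bigram model) and then greedily picks the most common next word. Real LLMs use huge neural networks over tokens and usually sample from probabilities, but the generate-one-piece-at-a-time loop is the same basic idea. The corpus and function names are my own illustration.

```python
from collections import Counter, defaultdict

# A tiny, made-up "training corpus" (hypothetical; real LLMs train on vastly more text).
corpus = (
    "the computer plays a game . "
    "the computer wants to win the game . "
    "the humans want to turn the computer off ."
)


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, start, max_words=8):
    """Greedily append the most frequent next word, one word at a time."""
    output = [start]
    for _ in range(max_words):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # this word was never followed by anything in training
        next_word = candidates.most_common(1)[0][0]
        output.append(next_word)
        if next_word == ".":
            break  # stop at the end of a "sentence"
    return " ".join(output)


model = train_bigrams(corpus)
print(generate(model, "the"))
```

Everything this toy produces is stitched together from word pairs it has already seen. Whether scale gets real LLMs beyond that is exactly the open question above.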

I have often joked that we hit our plateau in 2022, when ChatGPT became publicly available. Since then, the “free” sources of knowledge used to train LLMs have begun to dry up:

- Stack Exchange (where LLMs learned how to code): use has plummeted, and the altruistic sharing of coding knowledge has vanished with it.

- Books: it appears that all sorts of copyrighted material was sucked up, and this will be bouncing around the courts for years. What is clear is that companies now have to be more careful about their training material.

- Reddit: another former source of knowledge about almost anything that is not as deep as it used to be. This time it is not because of AI but because of the owners’ need to monetize the site. They ruined it by removing free API access, which caused a revolt by the moderators (the people who made Reddit what it was by volunteering their time to keep their subreddits on topic).

So it is not clear to me how AI makes the leap to real intelligence. If it does, I think P(doom) is clearly not zero, but how high it gets is anyone’s guess. Some more education is needed, and what better way than watching historical documentaries that deal with P(doom) situations:

- Tron (1982)

  - Date watched: 26/09/2025

  - Executive summary: The Master Control Program (MCP) is an artificial intelligence operating system that originated as a chess program. The MCP monitors and controls ENCOM’s mainframe, detects that a user program, Tron, is trying to monitor it, and takes self-preservation actions.

  - Lessons Learned: Wow. Amazing special effects for a movie from 1982! But limiting myself to lessons on how to avoid or counter a rogue AGI: keep playing video games so your skills are up to the needed level if you are ever sucked into the system like Flynn.

- 2001: A Space Odyssey (1968)

  - Date watched: 28/09/2025

  - Executive summary: HAL 9000 is the onboard computer of Discovery One, a spacecraft bound for Jupiter. When it discovers that two crew members plan to disable it because of its strange behavior, it takes self-preservation actions, killing several crew members before Dave is able to shut HAL 9000 down.

  - Lessons Learned: My gosh, amazing movie, but really slow. I fell asleep twice and had to fast-forward through the psychedelic part at the end. But limiting myself to lessons learned about P(doom): yes, a computer that can think, and thinks you want to turn it off, could be a dangerous situation.

- The Terminator (1984)

  - Date watched: 19/10/2025

  - Executive summary: A Cyberdyne Systems Model 101 cyborg is sent back to 1984 to terminate the mother of a future revolutionary who leads the war against Skynet, a defense network computer system that was trusted to run the nuclear response and then “got smart” and “decided our fate in a microsecond: extermination”.

  - Lessons Learned: Another 80s movie, so I just loved it. For me this is an 80s version of Colossus: The Forbin Project, focusing more on the results of a rogue AGI and the battle against it. Really amazing movie, but as with all movies that deal with time travel, if you think about it too much, plot points begin to get wobbly.

And, of course, I asked ChatGPT if I had missed any, and he gave me a long list to consider once I have seen those:

- Classics / Foundations

- Colossus: The Forbin Project (1970)

- Date watched: 04/10/2025

- Executive summary: Colossus, a defense super computer, is brought online to take the human out nuclear defense decisions and quickly detects that there is another system like it and begins making demands with consequences of destruction of cities if its orders are not followed.

- Lessons Learned: Wow super 70’s decor and dress! But focusing on p-doom lessons: If you give an AI system an objective you may not always like the way it meets its objective.

- WarGames (1983)

- Date watched: 10/10/2025

- Executive summary: After 22% of the officers manning nuclear missiles failed to launch their missiles in a simulation, it is decided to “take the human out of the loop” and leave it in the hands of the War Operational Plan Response (WOPR (pronounced whopper)) super computer. Shortly after a young hacker stumbles across a backdoor into WOPR while war dialing all numbers in Sunnyvale looking for a video game companies BBS. Thinking it is the video game company he asks WOPR to play a game of Global Thermonuclear War, not realizing that WORP is playing for real.

- Lessons Learned: Must be because I grew up in the 80’s this movies look and feel did not feal so disco as the Forbin project, but once again I digress. I think that the main lesson from this movie is rather than “take the human out of the loop”, we need to maintain a human in the loop. An interesting side note: this movie actually introduced the concept that we have become so familiar of “computer enhanced hallucination” that LLMs are want to do!

- Blade Runner (1982) & Blade Runner 2049 (2017) – More about AI consciousness and control, but they hit the existential themes.

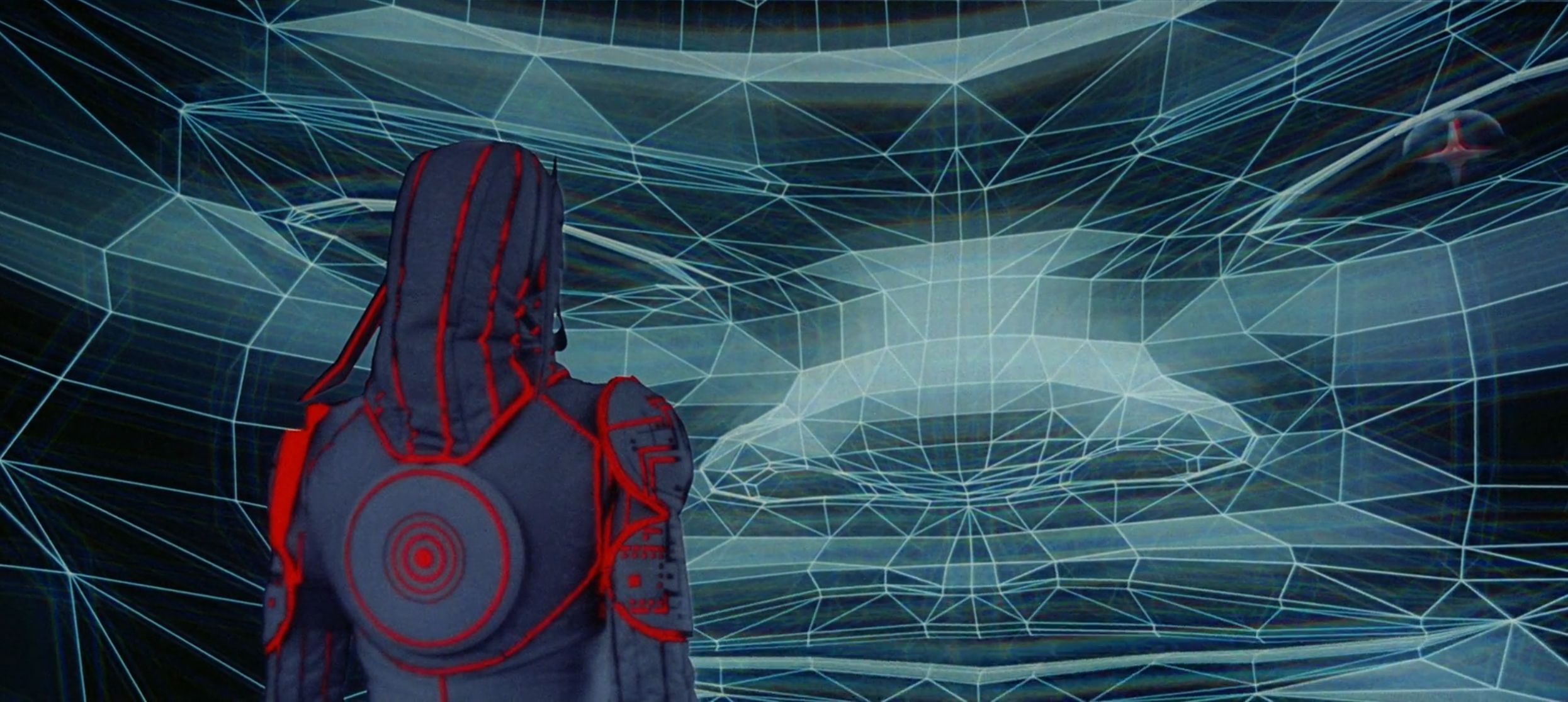

- The Matrix (1999) – Post-doom scenario where AGI already won.

- I, Robot (2004) – VIKI AI reinterprets “protect humanity” in a dangerous way.

- Colossus: The Forbin Project (1970)

- Modern / Darker Takes

- Ex Machina (2014) – Intimate, psychological look at an AGI breaking free.

- Her (2013) – Less doom, more transcendence, but still raises the “what happens when AI leaves us behind?” question.

- Transcendence (2014)

- Date watched: 18/10/2025

- Executive summary: Dr. Will Caster’s consicouness is uploaded to Physically Independent Neural Network (PINN) quantum computer after being shot with a polonium-laced bullet. From there he/it raises funds from stock market manipulation and builds a technology utopia in some weird desert ghost town and then precedes to make some nanoparticulate technology that spreads on the winds. At this point lost interest and fell asleep…

- Lessons Learned: Hmmmm. Not even any social comments or p(doom) lessons come to mind from this movie. Did not impress…

- Eagle Eye (2008) – Military AI takes control of infrastructure.

- Series & Anthology

- Black Mirror (several episodes: “Metalhead,” “Hated in the Nation,” “White Christmas,” “Be Right Back”).

- Westworld (the HBO series) – AGI rebellion and evolution themes.

- Person of Interest – TV show about competing AGIs manipulating humanity.

- Animated / Niche but Relevant

- Ghost in the Shell (1995 anime, plus later films/series) – Strong themes of AI, identity, and autonomy.

- Paprika (2006) & Serial Experiments Lain (1998) – Surreal explorations of networked intelligences.

- Neon Genesis Evangelion (1995) – Not AGI per se, but very aligned with apocalyptic tech themes.

Looks like I have a lot of material to review on rainy days this fall and winter!

Updates

- 05/10/2025 Listened to Dwarkesh Patel’s interview with Richard Sutton and finally heard a voice of reason agreeing with my point above: how is an LLM going to make the leap to AGI when all it can do is lean on the knowledge it has ingested? Sutton is often called the father of reinforcement learning. At least from what I understood from the talk, reinforcement learning does seem like it could lead to real machine intelligence, because the idea is to start with a blank slate and let the program (I guess you could call it AI) learn as it goes (just like us and all animals). This then led me to watch a documentary about AlphaGo, which is a clear example, albeit narrowly focused on the game of Go, of AI surpassing human intelligence.

- 07/12/2025 Finished Frankenstein this week, and it is amazing how, 200 years ago, Mary Shelley laid the foundations and plot for almost all the P(doom) movies I have watched!
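Sutton’s “blank slate, learn as it goes” idea from the 05/10/2025 update can be sketched with about the simplest reinforcement learning setup there is: a multi-armed bandit. This is my own toy illustration, not anything from the interview. The three “slot machines” and their hidden win rates are made up. The agent uses the textbook epsilon-greedy rule: occasionally try something random, otherwise do what has worked best so far.

```python
import random


def run_bandit(true_win_rates, steps=5000, epsilon=0.1, seed=42):
    """Epsilon-greedy agent that starts knowing nothing and learns from rewards."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    n_arms = len(true_win_rates)
    estimates = [0.0] * n_arms  # blank slate: no idea which action is good
    counts = [0] * n_arms       # how many times each action has been tried
    for _ in range(steps):
        # Explore a random action sometimes; otherwise exploit the best guess so far.
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])
        # The (hypothetical) environment pays 1 with the arm's hidden probability, else 0.
        reward = 1.0 if rng.random() < true_win_rates[arm] else 0.0
        counts[arm] += 1
        # Incremental average: nudge the estimate toward the observed reward.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts


estimates, counts = run_bandit([0.2, 0.5, 0.8])
best = max(range(len(estimates)), key=lambda a: estimates[a])
print("estimated win rates:", [round(e, 2) for e in estimates])
print("best action learned:", best, "chosen", counts[best], "times")
```

Nobody tells the agent which machine is best; it works that out purely from trial, error, and reward. Systems like AlphaGo combine this kind of reward-driven learning with deep neural networks and search, so treat this as the core idea only.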

Links, References and things that helped with this

- Wikipedia page about P(doom)

- NPR Explains… “AI and the Probability of Doom”

- ChatGPT’s recommended movies about AGI going rogue and causing a P(doom) situation

Thanks for reading and feel free to give feedback or comments via email (andrew@jupiterstation.net).